Research Projects

Autonomous Systems Group

Dr. Ufuk Topcu

Automatic detection of low-credibility information on social media

Mentor: Arash Amini

amini@utexas.edu

In recent years, misinformation has become a pervasive challenge, significantly impacting public discourse and decision-making. Combatting this issue requires identifying and mitigating low-credibility information across social media platforms, a key battleground for spreading misinformation. However, traditional manual labeling processes are time-consuming and challenging to scale, presenting a significant obstacle to timely and comprehensive misinformation management. To address this challenge, this project introduces students to the application of Bidirectional Encoder Representations from Transformers (BERT) in detecting low-credibility information through the innovative approach of contrastive learning.
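As a rough illustration of the contrastive idea mentioned above, the sketch below computes a classic pairwise contrastive loss on two embedding vectors: pairs with the same credibility label are pulled together, and pairs with different labels are pushed at least a margin apart. The function name `pairwise_contrastive_loss`, the `margin` value, and the toy two-dimensional embeddings are assumptions made for illustration. The project's actual loss and its BERT embeddings may differ.

```python
import math


def pairwise_contrastive_loss(embedding_a, embedding_b, same_label, margin=1.0):
    """Pairwise contrastive loss on two embedding vectors (hypothetical helper).

    Same-label pairs are penalized by their squared distance.
    Different-label pairs are penalized only when closer than `margin`.
    """
    distance = math.dist(embedding_a, embedding_b)
    if same_label:
        return distance ** 2
    return max(0.0, margin - distance) ** 2


# Toy embeddings standing in for encoder outputs (assumption, not real data).
post_a = [0.0, 0.0]
post_b = [3.0, 4.0]

loss_same = pairwise_contrastive_loss(post_a, post_b, same_label=True)
loss_diff_far = pairwise_contrastive_loss(post_a, post_b, same_label=False, margin=1.0)
loss_diff_near = pairwise_contrastive_loss(post_a, post_b, same_label=False, margin=6.0)
```

In training, a loss of this kind would be averaged over many pairs and minimized with respect to the encoder's parameters.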

This project aims to deepen students' understanding of the social media information ecosystem, focusing on the processes involved in distinguishing credible from non-credible content. Participants will gain hands-on experience with datasets from Reddit and Twitter, employing state-of-the-art algorithms to train and fine-tune language models. These models are designed to differentiate between low- and high-credibility information, equipping students with the skills and knowledge necessary to contribute to the ongoing fight against misinformation in the digital age.

Applying Simple Secure Multi-party Computation to Feedforward Neural Networks

Mentor: Yunhao Yang

yunhaoyang234@gmail.com

Secure multi-party computation (MPC) is a cryptographic technique that allows multiple parties to jointly compute a function over their private inputs without revealing those inputs to each other. In this project, the two parties are a machine learning model and a user, and the goal is to protect the privacy of the user’s input data from the model.

The project follows a simple MPC framework in which the user divides the input data into multiple secret shares and sends each share to the model separately. The project objective is to ensure the model cannot decode the original input data while the user gets the correct prediction from the model.

The student will be provided with trained feedforward neural networks for image classification, along with image datasets. The student is expected to implement a function that divides the raw image data into multiple secret shares. The expected outcome of this project is an application of MPC that protects the image data while preserving classification accuracy. During the experiments, we can visualize the encrypted images and compare them with the raw images.
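One possible way to divide image data into secret shares is additive secret sharing, sketched below. This is a minimal illustration, not necessarily the scheme used in the project. It assumes 8-bit grayscale pixels stored as a flat list, and the names `split_into_shares`, `reconstruct`, and `MODULUS` are hypothetical. It does not show how the model evaluates its layers on the shares.

```python
import secrets

MODULUS = 256  # assumption: 8-bit pixel values in the range 0..255


def split_into_shares(pixels, num_shares):
    """Split a flat list of pixel values into additive shares modulo MODULUS."""
    if num_shares < 2:
        raise ValueError("need at least two shares")
    # All but the last share are drawn uniformly at random.
    shares = [
        [secrets.randbelow(MODULUS) for _ in pixels]
        for _ in range(num_shares - 1)
    ]
    # The last share is chosen so that the shares sum to the pixel (mod MODULUS).
    last_share = [
        (pixel - sum(column)) % MODULUS
        for pixel, column in zip(pixels, zip(*shares))
    ]
    shares.append(last_share)
    return shares


def reconstruct(shares):
    """Recover the original pixels by summing all shares modulo MODULUS."""
    return [sum(column) % MODULUS for column in zip(*shares)]


image = [0, 17, 128, 255, 64, 200]  # toy "image" for illustration only
shares = split_into_shares(image, num_shares=3)
recovered = reconstruct(shares)
```

Each individual share is uniformly random on its own, so it reveals nothing about the image unless all shares are combined.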

Evaluating the impact of curriculum design in goal-conditioned RL

Mentor: Cevahir Koprulu

cevahir.koprulu@utexas.edu

The design of task sequences, i.e., curricula, aims to accelerate the teaching of complex behaviors to reinforcement learning (RL) agents. Given a target task, a common curriculum design approach is to begin with easier tasks and increase the difficulty gradually. This project aims to study the impact of curriculum design in goal-conditioned RL domains by comparing no curriculum, manual curriculum, and automated curriculum design approaches.
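To make the "easy first, then gradually harder" idea concrete, here is a minimal sketch of a manual curriculum that linearly increases a single difficulty parameter over training. It assumes task difficulty can be summarized by one number (here, a goal distance). The function name `manual_curriculum` and its parameters are hypothetical and are not taken from the provided code base.

```python
def manual_curriculum(epoch, num_epochs, easy_goal_distance, target_goal_distance):
    """Return the goal distance to train on at a given epoch (hypothetical helper).

    Difficulty grows linearly from the easy task at epoch 0 to the
    target task at the final epoch.
    """
    if num_epochs <= 1:
        return target_goal_distance
    # Fraction of training completed, clamped to [0, 1].
    progress = min(max(epoch / (num_epochs - 1), 0.0), 1.0)
    return easy_goal_distance + progress * (target_goal_distance - easy_goal_distance)


# Goal distances over a five-epoch training run (toy numbers).
schedule = [manual_curriculum(epoch, 5, 1.0, 9.0) for epoch in range(5)]
```

A no-curriculum baseline would correspond to always returning `target_goal_distance`, while automated approaches would replace the fixed linear schedule with one that adapts to the agent's performance.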

The student will be provided with implemented domains and algorithms and is expected to carry out experiments by changing target tasks and tuning parameters of the evaluated algorithms. The final phase of the project aims to visualize the empirical results both qualitatively and quantitatively, for example by plotting how curricula progress over training runs and by measuring the final performance of trained RL agents, respectively.

Zero-Shot Recognition of Handwritten Characters

Mentor: Tyler Ingebrand

tyleringebrand@gmail.com

Machine learning has demonstrated the potential to recognize objects in complex, high-dimensional images. However, a typical model must be trained on the exact objects it will be used to detect, and cannot be quickly modified to recognize new objects. Using a new technique from my research, computer vision algorithms will be augmented with the ability to recognize new objects from examples, without requiring a retraining period. This project has two parts:

First, the student will train a basic computer vision model on the MNIST handwritten digit training set, a classic computer vision use case. This part is an introduction to supervised learning and computer vision. I expect this part can be completed quickly, but it depends on the student’s past experience.

Second, the student will modify their approach based on my past research, and train a model to recognize new, unseen digits that are not in the training set, based on examples only.
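For intuition about recognizing new classes from examples without retraining, the sketch below uses a generic nearest-prototype classifier over feature vectors. This is a standard baseline chosen for illustration and is not the mentor's technique. The names `class_prototypes` and `classify`, and the toy two-dimensional features, are assumptions.

```python
import math


def class_prototypes(examples):
    """Average the example feature vectors of each class (hypothetical helper).

    `examples` maps a class label to a non-empty list of equal-length vectors.
    """
    prototypes = {}
    for label, vectors in examples.items():
        dimension = len(vectors[0])
        prototypes[label] = [
            sum(vector[i] for vector in vectors) / len(vectors)
            for i in range(dimension)
        ]
    return prototypes


def classify(features, prototypes):
    """Return the label whose prototype is closest to `features`."""
    return min(prototypes, key=lambda label: math.dist(features, prototypes[label]))


# Toy features standing in for the output of a trained feature extractor.
new_class_examples = {
    "A": [[0.0, 0.0], [0.0, 2.0]],
    "B": [[10.0, 10.0], [12.0, 10.0]],
}
prototypes = class_prototypes(new_class_examples)
prediction_near_a = classify([1.0, 1.0], prototypes)
prediction_near_b = classify([9.0, 9.0], prototypes)
```

Adding a new class only requires computing one more prototype from its examples, so no retraining of the feature extractor is involved in this baseline.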

This project requires knowledge of Python.

Safety Assurance in Reinforcement Learning Systems

Mentor: Surya Murthy

surya.murthy@utexas.edu

Ensuring the safety of reinforcement learning (RL) systems is vital for their real-world integration. However, guaranteeing safety during both training and testing phases remains a significant challenge. One way to enforce safety is to shield the RL model by modifying actions when they are unsafe.

In this project, students will work with pre-trained RL behaviors and safety constraints. They will receive Python code to simulate RL behavior and identify violations of safety constraints. The primary objective is to develop a shielding function that enforces safety constraints during testing. This involves implementing a Python function capable of modifying actions to ensure they comply with safety constraints.
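A shielding function of the kind described above might look like the following minimal sketch. It assumes a safety check is available as a callable and that a list of fallback actions is given. The names `shield`, `is_safe`, and `fallback_actions`, and the one-dimensional toy environment, are hypothetical and do not come from the provided project code.

```python
def shield(state, proposed_action, is_safe, fallback_actions):
    """Return the proposed action if it is safe, otherwise a safe fallback.

    `is_safe(state, action)` is an assumed callable that returns True when
    taking `action` in `state` satisfies the safety constraints.
    """
    if is_safe(state, proposed_action):
        return proposed_action
    # Try the fallback actions in order of preference.
    for action in fallback_actions:
        if is_safe(state, action):
            return action
    raise RuntimeError("no safe action available in this state")


# Toy example: an agent on a line must keep its position within [0, 10].
def stays_in_bounds(position, step):
    return 0 <= position + step <= 10


safe_step_at_edge = shield(10, 1, stays_in_bounds, fallback_actions=[0, -1])
safe_step_in_middle = shield(5, 1, stays_in_bounds, fallback_actions=[0, -1])
```

Here the shield leaves the policy's action untouched whenever it is already safe and only intervenes at the boundary.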

If time permits, students may explore modifications to the RL model's reward function to incorporate the shielding mechanism during training.

Sequential Negotiations Among AI Agents

Mentor: Abhishek Kulkarni

abhishek.kulkarni@austin.utexas.edu

Automated negotiation involves designing AI algorithms to negotiate on behalf of humans or entities with the aim of streamlining operations, reducing costs, and achieving better outcomes in complex decision-making scenarios. It finds applications in several problems in robotics and AI, such as coordinating multi-agent systems in disaster response, optimizing supply chain management processes, and enabling negotiation between autonomous vehicles for safe traffic flow. Traditional automated negotiations are often viewed as standalone events where the focus is primarily on the negotiation protocols and strategies. In contrast, sequential negotiation involves a series of negotiations where decisions made in earlier rounds can influence subsequent rounds.

The project aims to compare two state-of-the-art algorithms in sequential negotiation, including one currently under development within our group. Additionally, the project seeks to provide the student with valuable exposure to research methodology and practices.

The student will be tasked with conducting a concise literature review to identify a paper showcasing a state-of-the-art sequential negotiation algorithm. Following this, they will utilize a Python-based automated negotiation simulation platform to investigate the performance of the two algorithms. The student will determine the performance metric, produce results, and analyze these findings. Based on the findings, they will assess the strengths and weaknesses of the two algorithms, and, optionally, offer recommendations for mitigating any identified shortcomings.

Perception and Decision Uncertainty Quantification in Multi-modal Language Models

Mentor: Neel Bhatt

npbhatt@utexas.edu

Robots operate by perceiving their surroundings and determining appropriate actions to accomplish specific tasks. Multimodal foundation models, consisting of a vision encoder and a language generator, hold promise in furnishing robots with these crucial perceptual and decision-making capabilities. However, robots equipped with the two capabilities may fail to complete a task. The failure may result from inaccuracies in vision encoders when encoding image information, such as cars or pedestrians, or limitations in the reasoning capabilities of language generators. Knowing the cause of the failure can guide robots to effectively reduce the number of queries to the model and increase the probability of successful task completion.

The primary objective of this project is to enable students to develop a high-level understanding of vision-language models and uncertainty estimation methods. Students will be able to algorithmically identify scenarios where these models fail and the associated cause of failure.

Students will first perform a literature review on different types of uncertainty quantification algorithms for vision-language models. Subsequently, they will implement a state-of-the-art uncertainty quantification model on a dataset generated in-house. They will investigate how much the performance of the vision-language model improves when insights from the quantification of perception and decision uncertainty are used. They will also identify scenarios where the approach performs poorly and suggest ways to enhance performance. At the end of the project, students will present their findings in a short talk to an audience with research experience. The overall experience will provide students with exposure to common research practices.
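As a simple point of reference for uncertainty estimation, the sketch below computes the entropy of a predicted probability distribution, one basic and widely used uncertainty measure. It is not the state-of-the-art method the project will implement. The function name `predictive_entropy` and the toy probabilities are assumptions for illustration.

```python
import math


def predictive_entropy(probabilities):
    """Shannon entropy (in nats) of a discrete predictive distribution.

    Higher entropy means the model spreads its belief over more outcomes,
    i.e., it is less certain about its prediction.
    """
    return sum(-p * math.log(p) for p in probabilities if p > 0.0)


# Toy distributions over two candidate labels, e.g. "car" and "pedestrian".
confident = predictive_entropy([1.0, 0.0])
uncertain = predictive_entropy([0.5, 0.5])
```

A threshold on a score like this could be used to flag inputs on which the model's output should not be trusted.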

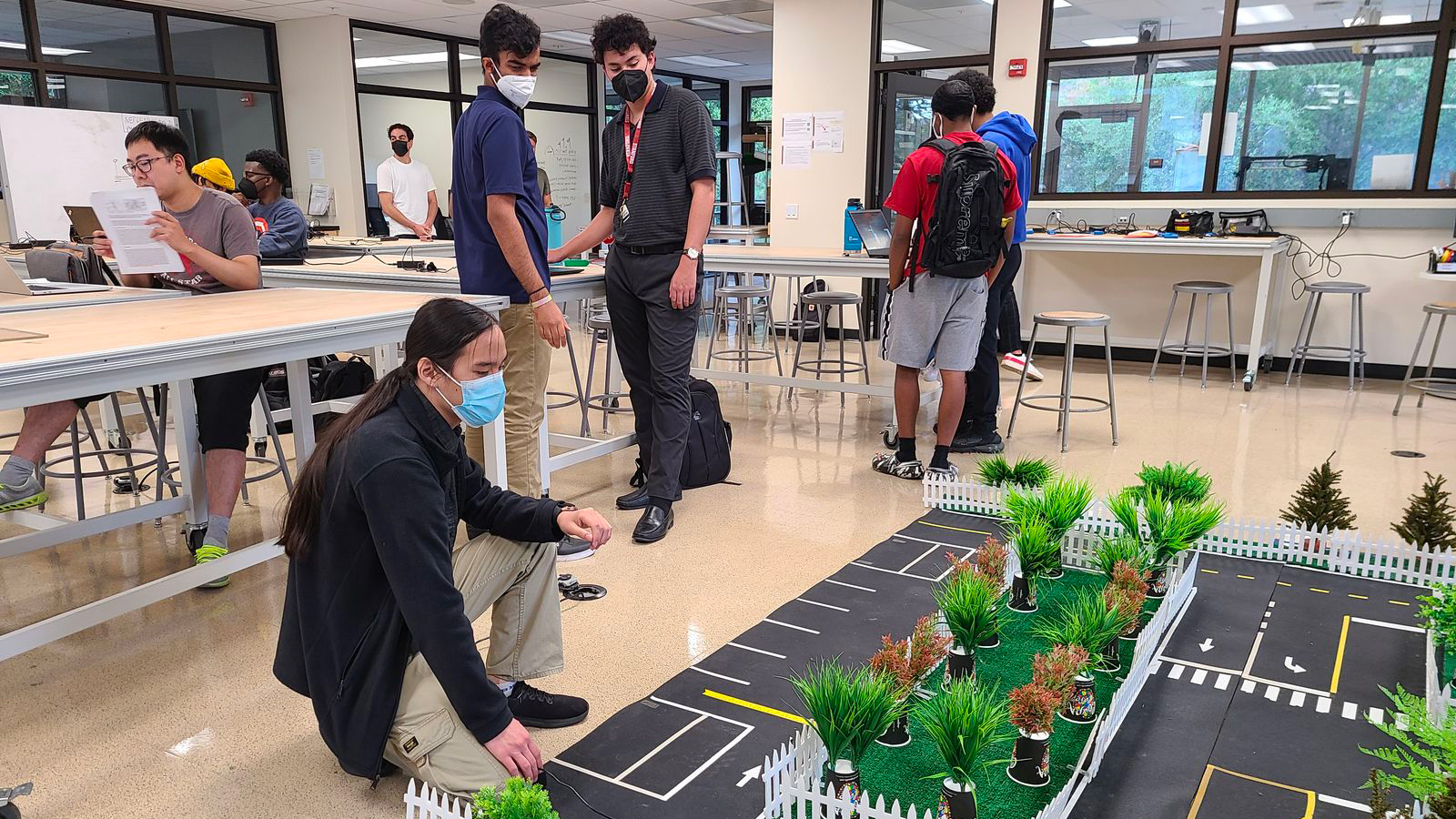

Control and Learning for Autonomous Robotics Group

Dr. David Fridovich-Keil

Collision-free robotic navigation in GPS-denied environments

Mentors: Tianyu Qiu, Dong Ho Lee

tianyuqiu@utmail.utexas.edu

leedh0124@utmail.utexas.edu

Robots are well suited to remote operations that keep humans away from hazards. For example, a robot could be sent on mapping, sample retrieval, or search-and-rescue missions. However, in many of these scenarios, GPS data may not be available to localize the robot, or may not be useful for avoiding unmapped obstacles. In these situations, the robot needs to rely on other onboard sensors to ensure collision-free movement.

In this project, algorithms will be devised to autonomously navigate a robot through a winding corridor. The algorithms will utilize LiDAR readings to avoid obstacles and ensure safe passage to the target destination.
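One very simple reactive strategy, shown here only as a starting point, is to steer toward the direction with the most free space in the LiDAR scan. The sketch assumes the scan is a list of range readings at evenly spaced angles. The function name `choose_heading` and its parameters are hypothetical, and the algorithms developed in the project may be quite different.

```python
import math


def choose_heading(ranges, angle_min, angle_increment, safety_distance):
    """Pick the heading (radians) of the farthest valid LiDAR reading.

    Returns None when no reading exceeds `safety_distance`, which signals
    that the robot should stop rather than move.
    """
    best_index = None
    for index, distance in enumerate(ranges):
        # Skip invalid readings and directions that are too close to obstacles.
        if not math.isfinite(distance) or distance <= safety_distance:
            continue
        if best_index is None or distance > ranges[best_index]:
            best_index = index
    if best_index is None:
        return None
    return angle_min + best_index * angle_increment


# Toy scan: five beams from -90 to +90 degrees, most free space straight ahead.
scan = [0.5, 1.0, 3.0, 1.2, 0.4]
heading = choose_heading(scan, -math.pi / 2, math.pi / 4, safety_distance=0.6)
blocked = choose_heading([0.2, 0.3, 0.1], -math.pi / 2, math.pi / 2, safety_distance=0.6)
```

In a winding corridor, repeatedly choosing the most open direction tends to follow the corridor, although it offers no guarantee of reaching the target destination on its own.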